You are here

Parallel Compilers case studies

Examples of Parallel Compilers

1 Parafrase Fortran reconstructing compiler: Parafrase is an optimizing compiler preprocessor that takes scientific Fortran code, constructs a program dependency graph and performes a series of optimization steps that creates revised version of the original program and optimize it for high speed architecture.

2. Bulldog Fortran reassembling compiler: The Bulldog compiler is aimed at automatic parallelisation at the instruction level. It is designed to catch parallelism not amenable to vectorization. It exploits parallelism within the basic block.

3. Cilk2c compiler: this is a clone compiler which converts CILK source code to C source code.

4. F90 / High Performance Fortran (HPF) : High performance Fortran extends F90 to support data parallel programming. Compiler directives allow programmer specification of data distribution and alignment. New compiler constructs and intrinsics allow the programmer to do computations and manipulations on data with different distributions.

1. Parallel Compiler with Grain size of Instruction

Compilation algorithms have been developed which can be implemented on a global highly-parallel machines. Illiac IV is a machine of this type in which the processing elements form a two dimensional array . PEPE is a machine of this type in which the processing elements form an unstructured associative ensemble. One of the eminent design factors in a parallel compiling environment is the under-lying data structure and organization which shall be used. Two organizations, called the horizontal and the vertical data organizations, have been explored along with their implications for the compiling process. To test the algorithms a Fortran compiler was designed and various portions of the compiler were implemented on the PEPE model which was build at Bell Telephone Laboratories. The compiler was designed to translate from Fortran II into IBM 360 Assembly Language. To program the parallel machine abbreviated Fortran was used.

The Machine

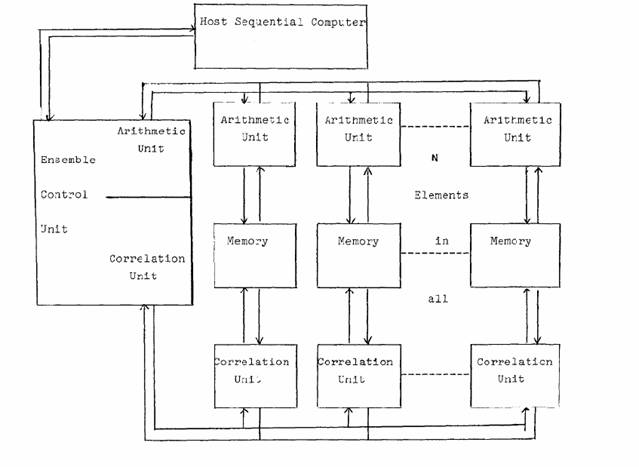

PEPE, which is an abbreviation for the Parallel Element Processing Ensemble, consists of an array of identical processing elements all having a common control unit (see Figure i). Each processing element contains the registers and adder logic of a parallel arithmetic unit and a 512 word random-access memory(32 bits per word). In keeping with the global nature of the machine, an element contains a minimum of control logic, the bulk of the control being concentrated in the common control unit. This implies that the control unit decodes and issues instructions to all processing elements simultaneously so that the elements are required to execute exactly the same instruction at exactly the same time. The elements are capable of executing a complete single address instruction repertoire permitting any desired arithmetic or logical operation. Features which add flexibility to this machine architectures are:

- Each element can process separate data from its own local memory or global data

- Facilities are available for designating a subset of the set of processing elements to participate in a particular instruction;

- Indexing within elements (which is currently not available on the PEPE machine) allows the various elements to access different word locations within their memories.

Figure 2 : PEPE architecture

Instructions reside in a sequential machine attached to PEPE called the host computer. This machine (an IBM/ 360/65) acts as a Job partner to the parallel machine and performs the non-parallel tasks which need to be done. Notice that in this example, the global control feature is illustrated whenever we address one specific word, because we actually address that word simultaneously in all of the elements. A major component of each element is a special-purpose concurrent input unit, called the correlation unit, which is made up of a number of associative registers.

The operations within this unit and in the control section associated with the arithmetic unit use associative techniques to provide novel capabilities for data input and retrieval. The addressing of processing elements by content rather than by element number allows the software to be independent of the ensemble size, and makes the ensemble more fault tolerant.

Parallel Parsing - The Horizontal Approach

The process of parsing and generating code for an arithmetic expression has been attacked using many different techniques all of whose processing times are directly proportional to the length n of the arithmetic expression to be processed i.e. t p = O(n k ), k > 0. This length can be counted in terms of the number of characters in the expression, or in terms of the number of operators and operands. The processing time can be counted in terms of the number of comparisons required or other measures which fit particular algorithms. An algorithm is presented which requires a fixed time which is always less than or equal to the time in the sequential analog of this parallel algorithm. This represents a substantial speed-up for long expressions, and a similar speed-up is possible for short expressions if the implementation allows processing many statements at once. Given that a stream of source text has been lexically analyzed and is ready to be put into the parallel machine as a string of tokens, we can spread these tokens horizontally across the elements, i.e., token 1 in element 1, token 2 in element 2, etc., or we can put all of the first k1 tokens into element 1, the next k2 tokens into element 2 etc. in a vertical organization. The difference in approach is tremendous. A horizontal organization allows one to operate on all tokens of a statement simultaneously to take advantage of intra-statement parallelism. The vertical organization in the context of our FORTRAN compiler might mean one statement per element in which case many statements might be processed simultaneously to gain speed via inter-statement parallelism. These contrasting organizational philosophies can also be extended to code optimization. Thus it was found that the horizontal organization is quite convenient for fast local optimization whereas the vertical organization is quite superior for peephole optimization and global optimization. The following syntactical analysis algorithm will be presented in conjunction with an example to illustrate the various steps.

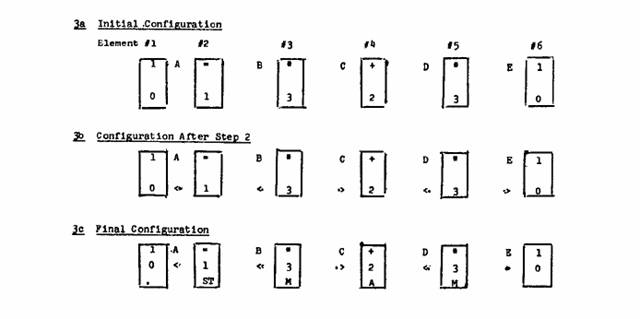

Step I: Input the tokens representing operators horizontally to parallel elements with precedence numbers attached to all operators. Place all operand tokens which are between a pair of operators in storage between the corresponding elements. Consider the example FORTRAN statement A=B*C+D*E. It would get placed into the parallel elements as shown in Figure 3a below. The precedence numbers are assigned in this example with larger numbers indicating operators which must be applied first; thus multiplication is done before addition is done before assignment. The symbol is used as a delimiter placed before and after a statement, and when this algorithm is expanded to process multiple statements, the symbol separates statements. It has lowest priority (0).

Note that Step I is really an input step which is assumed to be the lexical analysis phase and as such is not a part of the syntactical analysis process but simply sets up its initial conditions. Similarly, the output process after syntactical analysis is not considered a part of the syntactical analysis process, partly because the I/0 can be arranged to partially overlap with other processing.

Step 2 : Calculate relative precedence by comparing the precedence of each neighboring pair of operators. The result of this step on the example expression is shown in Figure 3b below. This is clearly a parallel step.

Step 3: Generate low level operation codes for the intermediate language. This is also a parallel step. For this example we use the mneumonics M for multiply, A for add, and ST for store. Then the parallel elements after Step 3 look like Figure 3c below. After Step 3, the parsing and code generation problem has essentially been reduced to one of sorting and outputting a list of instructions.

Figure 3 : Parsing arithmetic statement A = B*C+D*E using parallel elements

Step 4 : Output code. This can be accomplished within PEPE by accessing elements on information content rather than on element number. The intermediate code generated in the example being considered is :

M B,C,< >

M D,E,< >

A C,D,< >

ST A,B,< >

This intermediate code can then be optimized and transformed into the desired code, or alternatively one can take advantage of the parallel structure of the expression while it is within PEPE and do some local optimization in parallel.

Parallel Parsing - The Vertical Approach

The vertical approach to parsing requires placing successive related tokens in the same processing element. This may mean that all tokens of a single statement within a program to be compiled are placed in a single element. Then a syntax-directed top-down technique for parallel syntactical analysis and generation of intermediate language code can be implemented as follows. Within each element a region in memory is designated the S-string region (denoted by the variable S) which contains the input to the syntactical analyzer which consists of a string of tokens stored in consecutive locations and representing a statement.

A second region designated the C-structure region (denoted by the variable C) will contain the output of the syntactical analyzer. Pointers into these regions must be maintained as parallel variables (i.e. vectors with one element of the vector in each processing element) called SPNTR and CPNTR respectively. Also, a parallel variable called VALUE will contain a vector of zeroes and ones. Since a top-down parser is goal oriented, whenever a sub-goal is attempted, all elements in which the sub-goal was attained will return from the subroutine with VALUE=1 implying success. All elements which do not succeed will have VALUE=O when control is returned from the subroutine. Typical of the goal oriented routine is the statement recognizer (abbreviated STMT) whose goal is to recognize a single Fortran statement. It attempts to do this by calling other routines whose goals are to recognize and generate intermediate language for particular types of statements

SUBROUTINE STMT

CALL DOSTMT

CALL IFSTMT

CALL GOTOSTMT

A typical processing element would contain, as input to the syntactical analyzer, a list of tokens

which are resident in a processing element memory and which represent a Fortran IF statement.

The program segment will process the statement within this element and simultaneously

all IF statements in other elements. The program segment is written in the P-FOR* (abbreviating Parallel Fortran) language

The Symbol Table Routines

The PEPE hardware, previously described for associative processing, allows the implementation of very efficient table manipulation routines if one utilizes a horizontal data organization to store the symbol table within the parallel machine. For the language studied for implementation (Fortran II), variable names can contain five or less characters. By encoding characters in a 6 bit representation, five characters can be packed into a 32 bit PEPEword. This allows a very fast table look-up of any variable name by a single match of one PEPE word provided variables are all stored in the same word of different elements, and provided the table size is less than or equal to the number of elements so that no overflow table (which could also be in the parallel machine) is needed. One can also detect whether a variable name is within the table and if not, input a new entry quite easily using this data organization.

2. Parallel Compiler with Grain size of a Procedure

Compiler for Warp, a systolic array is a cross compiler that executes on workstations and produces optimized code for each of the processing elements of the systolic array. The target of the compiler is a multi-computer, each of the processors in the array contains local program and data memories. Quite frequently an application program for the Warp array contains different programs for different processing elements. Due to its high communication bandwidth, Warp is a good host for pipelined computations where different phases of the computation are mapped onto different processors. This usage model encourages the design of algorithms which result in different programs for the individual processors and this observation initiated our investigation into parallel compilation, since the program for each processor can be compiled independently. One of goals is to assess the practical speedup.

Source programs :

The structure of the programming language for Warp reflects the underlying architecture of the machine: A Warp program is a module that consists of one or more section programs which describe the computation for a section (group) of processing elements. Section programs contain one or more functions and execute in parallel. Because section programs execute independently, optimizations performed within one section program are independent of those performed within another.

Architecture of the parallel compiler :

The compiler is implemented in Common Lisp, and the sequential compiler runs as a Common Lisp process on a single SUN workstation under the UNIX operating system. The compiler consists of four phases:

Phase l: parsing and semantic checking

Phase 2: construction of the flow-graph, local optimization. and computation of global dependencies

Phase 3: software pipelining and code generation

Phase 4: generation of I/O driver code, assembly and post-processing (linking, format conversion for download modules, etc.)

Both the first and last phase are cheap compared to the optimization and code generation phases.

Furthermore, for the current implementation, these phases require global information that depends on all functions in a section. For example, to discover a type mismatch between a function return value and its use at a call site, the semantic checker has to process the complete section program. We therefore decided to only parallelize optimization and code generation. Parsing, semantic checking and assembly are performed sequentially. The structure of the parallel compiler then reflects the structure of the source programs. There exists a hierarchy of processes; the levels of the hierarchy are master level, section level and function level. The tasks of each level are as follows:

Master level : The master level consists of exactly one process, the master that controls the entire compilation. The master process is a C process, it invokes a Common Lip process that parses the Warp program to obtain enough information to set up the parallel compilation. Thus, the master knows the structure of the program and therefore the total number of processes involved in one compilation. As a side effect, if there are any syntax or semantic errors in the program, they are discovered at this time and the compilation is aborted. Once the master has finished, the compilation is complete. The master is invoked by the user.

Section level : Processes on the section level are called section masters, and there is one section master for each section in the source program. Section masters are C processes. Section masters are invoked by the master, which also supplies parse information so that the section masters know how many functions are in the section. A section master controls the processes that perform code generation for the functions within its section. When code has been generated for each function of the section, the section master combines the results so that the parallel compiler produces the same input for the assembly phase as the sequential compiler. Furthermore, the section master process is responsible to combine the diagnostic output that was generated during the compilation of the functions. After this is done, the section master terminates.

Function level : The number of processes on the function level, called function masters, is equal to the total number of functions in the program. Function masters are Common Lisp processes. The task of a function master is to implement phases 2 and 3 of the compiler, that is to optimize and generate code for one function.

A Multi Grain Parallelizing Compilation Scheme for OSCAR (optimally Scheduled Advanced Multiprocessor)

In parallelizing Fortran programs on multiprocessor systems, the loop concurrentization, such as DO-all and Do-across, has so far been used widely. Currently, many types of Do-loops can be concurrentized with support of strong data dependency analysis and program restructuring. To improve the effective performance of the multiprocessor systems, it is important to exploit the coarse grain parallelism among loops, subroutines, and basic blocks and the fine grain parallelism inside a sequential loop and a basic block as well as the medium grain parallelism among iterations in a Do-all and Do-across loop.

In the fine grain parallel processing on a multiprocessor systems, an instruction level grain seems too fine compared with data transfer overhead among the processors. Therefore, parallelism among fine grain tasks, namely, parallelism among statements, has been exploited with the support of a static scheduling algorithm considering data transfer overhead and architectures that allow us efficient synchronizations and efficient data transfers. However, the parallel processing of near fine grain tasks using the static scheduling on multiprocessor system also has problems to handle runtime uncertainties. So a multi grain parallel processing scheme on a multiprocessor system which effectively combines the macro data-flow computation, the loop concurrentization and the near fine grain processing has been proposed. The application of dynamic scheduling to the coarse grain tasks allows us to keep dynamic scheduling overhead relatively small. First the multi-grain parallelising compilation scheme for a multiprocessor system was proposed and then it was implemented on a shared memory multiprocessor system called OSCAR.